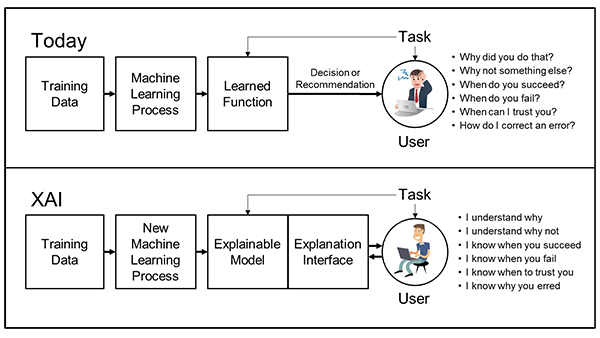

Explainable AI (XAI) refers to artificial intelligence systems that are able to communicate their internal models and functioning to humans.

See also Integrating symbols into deep learning for some discussion and ideas.

DARPA is starting a project for this: article

Cognitive Machine Learning (1): Learning to Explain

Feature visualization

Interpreting Deep Neural Networks with SVCCA

Any sufficiently complex solution becomes incomprehensible to a fixed type of intelligence. Any sufficiently complex problem requires a solution complex enough to be incomprehensible.

Maybe, regarding neural networks/deep learning for AI, we either:

* Decide we aren't gonna tackle the most difficult problems (not gonna happen).
* Give up on needing to understand our solutions to problems (extreme pragmatism).
* Become more intelligent so we have some chance of understanding the solutions, though there will always be things beyond our grasp (Neuralink/transhumanist approach).