A kind of recurrent neural network; a neural network with memory.

Understanding LSTMs

Video Backpropogating an LSTM: A Numerical Example

Introduced in Hochreiter & Schmidhuber (1997)

Explanation and implementation in TensorFlow

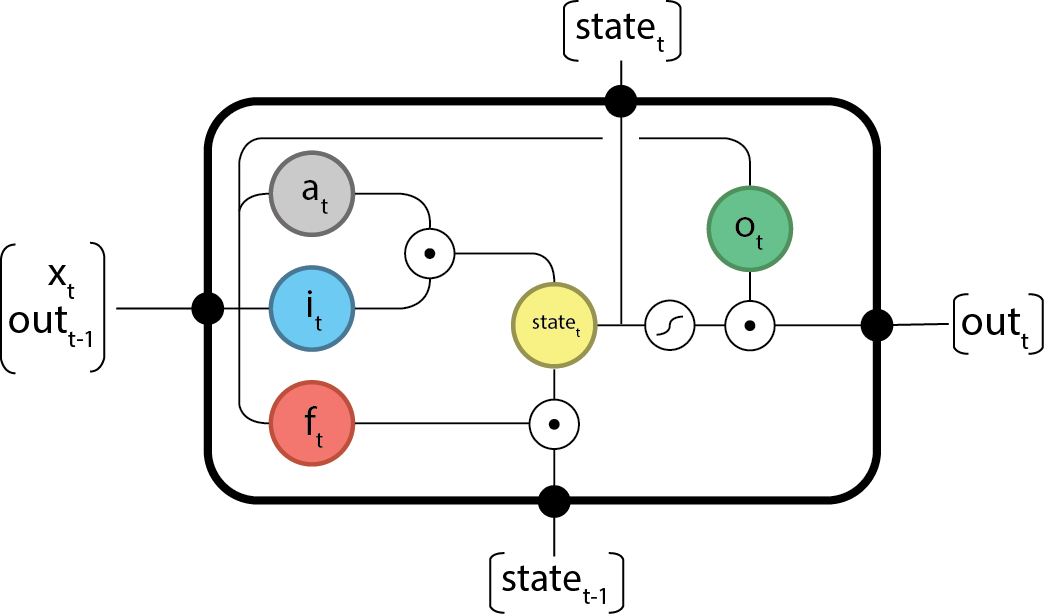

A crucial innovation to recurrent networks was the Long Short-Term Memory (LSTM) (Hochreiter and Schmidhuber, 1997). This very general architecture was developed for a specific purpose, to address the “vanishing and exploding gradient” problem (Hochreiter et al., 2001a), which we might relabel the problem of “vanishing and exploding sensitivity.” LSTM ameliorates the problem by embedding perfect integrators (Seung, 1998) for memory storage in the network. The simplest example of a perfect integrator is the equation x(t+1) = x(t) + i(t), where i(t) is an input to the system. The implicit identity matrix Ix(t) means that signals do not dynamically vanish or explode. If we attach a mechanism to this integrator that allows an enclosing network to choose when the integrator listens to inputs, namely, a programmable gate depending on context, we have an equation of the form x(t+1) = x(t) + g(context)i(t). We can now selectively store information for an indefinite length of time.

From here
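
The two update rules quoted above can be sketched in a few lines of plain Python. This is a minimal illustration, not an LSTM implementation: the function names `perfect_integrator` and `gated_integrator` are hypothetical, the state is a scalar, and the gate values are supplied directly as numbers rather than computed from a context by a learned function g.

```python
def perfect_integrator(inputs, x0=0.0):
    """Iterate x(t+1) = x(t) + i(t) and return every state, starting with x0."""
    x = x0
    trajectory = [x]
    for i_t in inputs:
        x = x + i_t  # identity carry-over of x(t): the state neither decays nor blows up
        trajectory.append(x)
    return trajectory


def gated_integrator(inputs, gates, x0=0.0):
    """Iterate x(t+1) = x(t) + g(t) * i(t).

    Assumption: each gate value g(t) is given directly (0.0 = ignore the
    input, 1.0 = listen to it). In an LSTM the gate would be computed
    from the context by the enclosing network.
    """
    x = x0
    trajectory = [x]
    for i_t, g_t in zip(inputs, gates):
        x = x + g_t * i_t  # the gate decides whether the integrator listens
        trajectory.append(x)
    return trajectory


inputs = [1.0, 5.0, -2.0, 3.0]
gates = [1.0, 0.0, 0.0, 0.0]  # listen to the first input only

print(perfect_integrator(inputs))       # [0.0, 1.0, 6.0, 4.0, 7.0]
print(gated_integrator(inputs, gates))  # [0.0, 1.0, 1.0, 1.0, 1.0]
```

With the gate closed after the first step, the stored value 1.0 is carried forward unchanged however many further inputs arrive, which is the "selectively store information for an indefinite length of time" behavior the passage describes.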